3.3 xarray Groupby#

Lesson Content

groupby

groupby bins

Context#

Yesterday we began to explore working with data in xarray. Today we are going to dig into that even deeper with a concept called groupby. Groupby is going to allow us to split our data up into different categories and analyze them based on those categories. It sounds a bit abstract right now, but just wait - it’s powerful!

import xarray as xr

# sst = xr.open_dataset("https://www.ncei.noaa.gov/thredds/dodsC/OisstBase/NetCDF/V2.1/AVHRR/198210/oisst-avhrr-v02r01.19821007.nc")

sst = xr.open_dataset('./data/oisst-avhrr-v02r01.20220304.nc')

sst = sst['sst'].squeeze(dim='zlev', drop=True)

Groupby#

While we have lots of individual gridpoints in our dataset, sometimes we don’t care about each individual reading. Instead we probably care about the aggregate of a specific group of readings.

For example:

Given the average temperature of every county in the US, what is the average temperature in each state?

Given a list of the opening dates of every Chuck E Cheese stores, how many Chuck E Cheeses were opened each year? 🧀

In xarray we answer questions like that that with groupby.

Breaking groupby into conceptual parts#

In addition to the dataframe, there are three main parts to a groupby:

Which variable we want to group together

How we want to group

The variable we want to see in the end

Without getting into syntax yet we can start by identifiying these in our two example questions.

Given the average temperature of every county in the US, what is the average temperature in each state?

Which variable to group together? -> We want to group counties into states

How do we want to group? -> Take the average

What variable do we want to look at? Temperature

Given a list of the opening dates of every Chuck E Cheese stores, how many Chuck E Cheeses were opened each year?

Which variable to group together? -> We want to group individual days into years

How do we want to group? -> Count them

What variable do we want to look at? Number of stores

📝 Check your understanding

Identify each of three main groupby parts in the following scenario:

Given the hourly temperatures for a location over the course of a month, what were the daily highs?

Which variable to group together?

How do we want to group?

What variable do we want to look at?

groupby syntax#

We can take these groupby concepts and translate them into syntax. The first two parts (which variable to group & how do we want to group) are required for pandas. The third one is optional.

Starting with just the two required variables, the general syntax is:

DATAFRAME.groupby(WHICH_GROUP).AGGREGATION()

Words in all capitals are variables. We’ll go into each part a little more below.

# We only have 1 month, so this doesn't fly here maybe on homework?

sst.groupby('time.month').mean()

<xarray.DataArray 'sst' (month: 1, lat: 720, lon: 1440)> Size: 4MB

array([[[ nan, nan, nan, ..., nan, nan, nan],

[ nan, nan, nan, ..., nan, nan, nan],

[ nan, nan, nan, ..., nan, nan, nan],

...,

[-1.73, -1.74, -1.75, ..., -1.76, -1.75, -1.73],

[-1.74, -1.77, -1.78, ..., -1.78, -1.77, -1.74],

[-1.8 , -1.8 , -1.8 , ..., -1.8 , -1.8 , -1.8 ]]],

shape=(1, 720, 1440), dtype=float32)

Coordinates:

* lat (lat) float32 3kB -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

* lon (lon) float32 6kB 0.125 0.375 0.625 0.875 ... 359.4 359.6 359.9

* month (month) int64 8B 3

Attributes:

long_name: Daily sea surface temperature

units: Celsius

valid_min: -300

valid_max: 4500'WHICH_GROUP'#

This can be any of the dimensions of your dataset. In physical oceanography, for example, it is common to group by latitude, so that you can see how a variable changes as you move closer to or further away from the equator.

sst.groupby('lat').mean(...)

<xarray.DataArray 'sst' (lat: 720)> Size: 3kB

array([ nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, nan, nan,

nan, nan, -1.60576057e+00, -1.60936546e+00,

-1.59555542e+00, -1.56879270e+00, -1.53598309e+00, -1.50997424e+00,

-1.49665833e+00, -1.47572505e+00, -1.44060051e+00, -1.40049088e+00,

-1.37327409e+00, -1.34686565e+00, -1.33067799e+00, -1.33130527e+00,

-1.30808103e+00, -1.27450454e+00, -1.23564434e+00, -1.22612131e+00,

-1.22652245e+00, -1.21986055e+00, -1.21165824e+00, -1.20017111e+00,

-1.18722463e+00, -1.18862689e+00, -1.21300006e+00, -1.22864592e+00,

-1.25179827e+00, -1.24926305e+00, -1.23627651e+00, -1.21719289e+00,

-1.18193030e+00, -1.15649366e+00, -1.12543058e+00, -1.08391201e+00,

...

8.32177103e-02, 6.03025919e-03, -9.51826572e-02, -1.70496613e-01,

-2.35420361e-01, -2.81060517e-01, -3.25153381e-01, -3.84170085e-01,

-4.37258244e-01, -4.73952502e-01, -5.23441434e-01, -6.37329757e-01,

-7.34746337e-01, -8.06852460e-01, -8.51410925e-01, -8.92525196e-01,

-9.19440627e-01, -9.83572781e-01, -1.04641449e+00, -1.09051383e+00,

-1.15335429e+00, -1.21488237e+00, -1.27574754e+00, -1.31679618e+00,

-1.36032736e+00, -1.38394511e+00, -1.41072977e+00, -1.43635023e+00,

-1.44863868e+00, -1.46660531e+00, -1.50196135e+00, -1.53681326e+00,

-1.57403004e+00, -1.60348368e+00, -1.63291144e+00, -1.65726089e+00,

-1.66841388e+00, -1.67372537e+00, -1.68312669e+00, -1.69431043e+00,

-1.70083857e+00, -1.71191311e+00, -1.71884120e+00, -1.72075558e+00,

-1.71685088e+00, -1.71835732e+00, -1.72196531e+00, -1.72431743e+00,

-1.72588181e+00, -1.72574711e+00, -1.72516668e+00, -1.72440970e+00,

-1.72136807e+00, -1.71859705e+00, -1.71676397e+00, -1.71635401e+00,

-1.71670127e+00, -1.71668041e+00, -1.71719444e+00, -1.71887493e+00,

-1.72009718e+00, -1.72070146e+00, -1.71927083e+00, -1.71474302e+00,

-1.70872223e+00, -1.70366669e+00, -1.70378482e+00, -1.70760393e+00,

-1.71209717e+00, -1.71631944e+00, -1.71732640e+00, -1.71518052e+00,

-1.71087492e+00, -1.70659006e+00, -1.70940280e+00, -1.67388880e+00],

dtype=float32)

Coordinates:

* lat (lat) float32 3kB -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

Attributes:

long_name: Daily sea surface temperature

units: Celsius

valid_min: -300

valid_max: 4500AGGREGATION#

The goal with each of the groups of data is to end up with a single value for the things in that group. To tell xarray how to gather the datapoints together we specify which function we would like it to use. Any of the aggregation functions we talked about at the beginning of the lesson work for this!

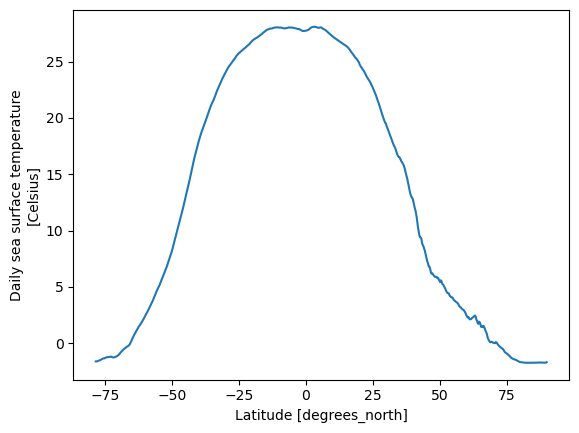

sst.groupby('lat').mean(...).plot()

[<matplotlib.lines.Line2D at 0x7f2e0ddd5590>]

What do we see? Hot water near the equator and chilly water near the poles.

Note

The ellipses ... inside the .mean() tell xarray to take the mean over all of the remaining axis. You wouldn’t have to do that - you may instead want to take the mean over just the latitude and keep the time resolution. It’s quite common, though, to want to aggregate over all remaining axis.

time dimension#

If your data has a time dimension and it is formatted as a datetime object you can take advantage of some slick grouping capabilities. For example, you can group by a time group like 'time.month', which will grab all make 12 groups for you, putting all the data from each month into its own group.

# We only have 1 month, so this doesn't fly here maybe on homework?

sst.groupby('time.month').mean()

<xarray.DataArray 'sst' (month: 1, lat: 720, lon: 1440)> Size: 4MB

array([[[ nan, nan, nan, ..., nan, nan, nan],

[ nan, nan, nan, ..., nan, nan, nan],

[ nan, nan, nan, ..., nan, nan, nan],

...,

[-1.73, -1.74, -1.75, ..., -1.76, -1.75, -1.73],

[-1.74, -1.77, -1.78, ..., -1.78, -1.77, -1.74],

[-1.8 , -1.8 , -1.8 , ..., -1.8 , -1.8 , -1.8 ]]],

shape=(1, 720, 1440), dtype=float32)

Coordinates:

* lat (lat) float32 3kB -89.88 -89.62 -89.38 -89.12 ... 89.38 89.62 89.88

* lon (lon) float32 6kB 0.125 0.375 0.625 0.875 ... 359.4 359.6 359.9

* month (month) int64 8B 3

Attributes:

long_name: Daily sea surface temperature

units: Celsius

valid_min: -300

valid_max: 4500groupby bins#

Breaking down the process#

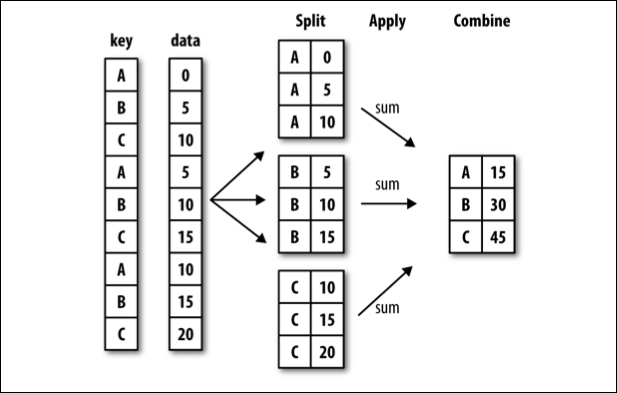

There is a lot that happens in a single step with groupby and it can be a lot to take in. One way to mentally situate this process is to think about split-apply-combine.

split-apply-combine breaks down the groupby process into those three steps:

SPLIT the full data set into groups. Split is related to the question Which variable to group together?

APPLY the aggregation function to the individual groups. Apply is related to the question How do we want to group?

COMBINE the aggregated data into a new dataframe